Some RL ideas I am currently working on.

This page mirrors my Notion page, with some lag.

Experiments Done:

Config:

Starting model: r1-1.5b (qwen2.5-math-base, SFT'd on 800k samples generated by the full R1 model)

Dataset: gsm8k (an easy dataset, so I can run my experiments quickly)

Max output length: 3200 tokens (constrained by a single H100/A100)

num_generations: 7 (constrained by compute; more is better, though I am sure there is a point beyond which it won't matter)

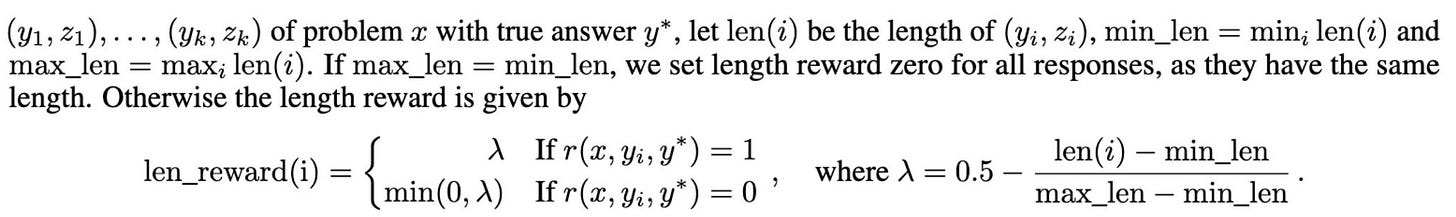

Length penalty (a new addition, directly inspired by the Kimi 1.5 paper):

```python
def calculate_length_reward(response_len: int, min_len: int, max_len: int, is_correct: bool) -> float:
    """
    Calculate length-based reward component.

    Args:
        response_len: Length of current response
        min_len: Minimum length across all responses
        max_len: Maximum length across all responses
        is_correct: Whether the response is correct

    Returns:
        Length reward value
    """
    # If all responses have the same length, return 0
    if max_len == min_len:
        return 0.0

    # Calculate lambda: 1.0 for the shortest response, 0.0 for the longest
    lambda_val = 1.0 - (response_len - min_len) / (max_len - min_len)

    # Correct responses get lambda; incorrect ones get min(0, lambda).
    # Note: with the 1.0 offset, lambda is in [0, 1], so incorrect responses always
    # get 0 here. Kimi 1.5 uses a 0.5 offset, so long incorrect responses go negative.
    return lambda_val if is_correct else min(0.0, lambda_val)
```

from: https://github.com/sathvikask0/r1-distilled-RL/blob/main/3200_7.py#L76
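A minimal sketch of how the length reward could feed into GRPO for one gsm8k question with num_generations = 7. The assumptions are mine, not taken from 3200_7.py: the total reward is a 0/1 accuracy reward plus the length reward with equal weight, advantages use GRPO's group normalization (reward minus the group mean, divided by the group standard deviation), and `group_rewards` / `grpo_advantages` are hypothetical helpers. The lengths and correctness flags are illustrative inputs, not results.

```python
import statistics


def calculate_length_reward(response_len: int, min_len: int, max_len: int, is_correct: bool) -> float:
    # Same logic as the function above, repeated so this block runs on its own.
    if max_len == min_len:
        return 0.0
    lambda_val = 1.0 - (response_len - min_len) / (max_len - min_len)
    return lambda_val if is_correct else min(0.0, lambda_val)


def group_rewards(lengths: list[int], correct: list[bool]) -> list[float]:
    """Hypothetical total reward per generation: accuracy (1/0) + length reward."""
    lo, hi = min(lengths), max(lengths)
    return [
        float(ok) + calculate_length_reward(n, lo, hi, ok)
        for n, ok in zip(lengths, correct)
    ]


def grpo_advantages(rewards: list[float], eps: float = 1e-4) -> list[float]:
    """GRPO-style advantage: normalize each reward by its group's mean and std."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# One question, num_generations = 7 (illustrative token lengths and correctness).
lengths = [420, 910, 650, 3200, 500, 780, 1200]
correct = [True, True, False, False, True, True, False]
rewards = group_rewards(lengths, correct)
advantages = grpo_advantages(rewards)
for n, ok, r, a in zip(lengths, correct, rewards, advantages):
    print(f"len={n:5d} correct={ok!s:5} reward={r:.3f} advantage={a:+.2f}")
```

With the 1.0 offset, every incorrect response ends up with the same reward, so the length term only reorders the correct ones: the shortest correct answer gets the largest advantage.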

All of this took under 10 GPU hours on a single H100, so it costs less than $25 on most on-demand GPU providers.

Link to the LoRA checkpoint: https://huggingface.co/sathvikask/r1-1.5b-RL-gsm8k…

I also added steps to run inference to the repo: https://github.com/sathvikask0/r1-distilled-RL…

Training stopped at 418 steps (it was a preemptible job). I am currently working on understanding where the model succeeds and what its failure modes are; I want to do a deep analysis before starting any new experiments.

Experiments I will do (future):

How much further can simple RL (GRPO + accuracy-based rewards) improve the performance of r1-1.5b (distilled using 800k samples from full R1) when trained on data from the OpenAI Math dataset?
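For the accuracy-based reward, here is a sketch of a MATH-style checker, assuming the model is prompted to put its final answer in `\boxed{...}` and that a plain string match after light normalization is good enough. `last_boxed`, `normalize` and `accuracy_reward` are hypothetical names; a real grader would also need to recognise equivalent forms such as `1/2` vs `0.5`, which this sketch does not.

```python
from typing import Optional


def last_boxed(text: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in text, handling nested braces."""
    start = text.rfind("\\boxed{")
    if start == -1:
        return None
    depth = 1
    out = []
    for ch in text[start + len("\\boxed{"):]:
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth == 0:
                return "".join(out)
        out.append(ch)
    return None  # unbalanced braces: treat as "no answer"


def normalize(answer: str) -> str:
    """Very light normalization: drop spaces and a trailing period."""
    return answer.replace(" ", "").rstrip(".")


def accuracy_reward(completion: str, reference: str) -> float:
    """1.0 if the last boxed answer string-matches the reference, else 0.0."""
    pred = last_boxed(completion)
    if pred is None:
        return 0.0
    return 1.0 if normalize(pred) == normalize(reference) else 0.0


print(accuracy_reward(r"... so the answer is \boxed{\frac{1}{2}}.", r"\frac{1}{2}"))  # prints 1.0
```

For gsm8k, the reference would be the number after `####` in the dataset's answer field.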

How much will its performance improve on related and unrelated benchmarks, demonstrating its generalisation capabilities?

Once this is done, we can use curriculum learning, which trains the model on easy questions first and then moves on to hard ones (as in the Kimi 1.5 paper).

Easy vs. hard is decided by the model's pass rate over 10 samples at temperature 0.6.

Keep track of each question's pass rate after every iteration and sample from the dataset based on pass rate; as soon as a question's pass rate reaches 100%, drop it from the dataset.
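One way to turn the pass-rate idea into a sampler, sketched under my own assumptions: initial pass rates come from the 10 samples at temperature 0.6, "easy first" is implemented as a `min_pass_rate` threshold that you lower as training progresses, and within the eligible pool questions are weighted by 1 - pass rate so the less-solved ones are not starved. `PassRateCurriculum` and its methods are hypothetical.

```python
import random


class PassRateCurriculum:
    """Hypothetical pass-rate curriculum: easy questions first, solved ones dropped."""

    def __init__(self, pass_rates: dict[str, float]):
        # Initial pass rates, e.g. from 10 samples per question at temperature 0.6.
        # Questions that are already at 100% are never added.
        self.pass_rate = {q: r for q, r in pass_rates.items() if r < 1.0}

    def update(self, question_id: str, num_correct: int, num_samples: int) -> None:
        """Record a question's latest pass rate; drop it once it reaches 100%."""
        rate = num_correct / num_samples
        if rate >= 1.0:
            self.pass_rate.pop(question_id, None)
        else:
            self.pass_rate[question_id] = rate

    def sample(self, k: int, min_pass_rate: float, rng: random.Random) -> list[str]:
        """Draw k question ids with pass rate >= min_pass_rate.

        Start with a high min_pass_rate (easy questions only) and lower it over
        training to move on to harder ones. Within the eligible pool, questions
        are weighted by (1 - pass rate), so less-solved ones come up more often.
        """
        pool = [q for q, r in self.pass_rate.items() if r >= min_pass_rate]
        if not pool:
            return []
        weights = [1.0 - self.pass_rate[q] for q in pool]
        return rng.choices(pool, weights=weights, k=k)


rng = random.Random(0)
curriculum = PassRateCurriculum({"q1": 0.9, "q2": 0.5, "q3": 0.1, "q4": 1.0})
early_batch = curriculum.sample(4, min_pass_rate=0.5, rng=rng)  # only q1/q2 eligible
curriculum.update("q1", num_correct=7, num_samples=7)  # solved every time: dropped
```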

We want the model to consistently produce right answers.

Since GRPO already samples the model 5-10 times per question, the pass rate comes for free.
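Because the pass rate falls out of the GRPO group, it can be read straight off the accuracy rewards. A sketch assuming the rewards arrive as a flat list in which each question's `num_generations` completions are contiguous (check this against your trainer's ordering); `pass_rates_from_group` is a hypothetical helper whose output can be used to update each question's tracked pass rate.

```python
def pass_rates_from_group(accuracy_rewards: list[float], num_generations: int) -> list[float]:
    """Split a flat list of 0/1 accuracy rewards into consecutive groups of
    num_generations (one group per question) and return each group's pass rate."""
    if len(accuracy_rewards) % num_generations != 0:
        raise ValueError("number of rewards must be a multiple of num_generations")
    return [
        sum(accuracy_rewards[i:i + num_generations]) / num_generations
        for i in range(0, len(accuracy_rewards), num_generations)
    ]


# Two questions with 7 generations each (illustrative rewards, not results).
batch_rewards = [1, 1, 0, 1, 1, 1, 1,
                 0, 0, 1, 0, 0, 0, 0]
pass_rates = pass_rates_from_group(batch_rewards, num_generations=7)  # [6/7, 1/7]
```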

Use r1-7b as a reward model alongside simple accuracy rewards, like a teacher scoring the answer. This is prone to reward hacking, but because r1-7b is a thinking model and larger than the 1.5b student, it should be hard to hack.

Using this method, we can potentially beat the 7b model's performance, and this only counts if the 7b model was also trained with RL first; beating an SFT-only 7b is not a big deal.

The model linked above is NOT trained with RL; it is only SFT trained.
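A sketch of mixing the accuracy reward with a teacher score. The teacher here is a placeholder callable (`dummy_teacher` is hypothetical; in practice it would prompt r1-7b to grade the response), and the clipping and `teacher_weight = 0.5` are assumptions chosen so the teacher can never outweigh correctness, which limits how much a hacked teacher score can pay off.

```python
from typing import Callable

# A teacher maps (question, response) -> score, ideally in [0, 1].
Teacher = Callable[[str, str], float]


def combined_reward(question: str, response: str, is_correct: bool,
                    teacher: Teacher, teacher_weight: float = 0.5) -> float:
    """Accuracy reward plus a clipped, down-weighted teacher score."""
    score = min(max(teacher(question, response), 0.0), 1.0)  # clip to [0, 1]
    return float(is_correct) + teacher_weight * score


def dummy_teacher(question: str, response: str) -> float:
    # Hypothetical stand-in for r1-7b grading the response; it simply prefers
    # responses that explain themselves. Replace with a real model call.
    return 1.0 if "because" in response else 0.3


print(combined_reward("What is 2 + 2?", "4, because 2 + 2 = 4", True, dummy_teacher))  # prints 1.5
```

Since the teacher term is capped at 0.5, a wrong answer with a perfect teacher score still earns less than any correct answer.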

If we can make the previous point work, we have a flywheel: use a bigger model trained with RL as a reward model (plus accuracy rewards) to train a smaller model that outperforms the bigger one (the student beating the teacher with the teacher's advice), then use this smaller model to generate high-quality data for the next version of base models and for SFT tuning.

More ideas:

Rewards should be like dopamine hits: if the model solves questions of the same level (based on pass rate) multiple times, its reward drops proportionally, but it gets a proportionally higher reward for solving a tougher question. The loop then repeats, so the model only keeps getting high rewards if it constantly improves.
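A toy version of the "dopamine" reward, where every choice is an assumption: pass rate is bucketed into 5 difficulty levels, a correct answer at level L pays (L + 1) / (1 + n), where n is how many times the model has already solved questions at that level, and wrong answers pay 0. `DopamineReward` is a hypothetical class, and the 1 / (1 + n) decay is just one possible habituation schedule.

```python
from collections import defaultdict


class DopamineReward:
    """Hypothetical habituating reward: repeats at one difficulty level pay less,
    harder levels pay more."""

    def __init__(self, num_levels: int = 5):
        self.num_levels = num_levels
        self.solves: dict[int, int] = defaultdict(int)  # level -> correct solves so far

    def level(self, pass_rate: float) -> int:
        """Map a question's pass rate to a difficulty level (0 = easiest)."""
        return min(int((1.0 - pass_rate) * self.num_levels), self.num_levels - 1)

    def reward(self, pass_rate: float, is_correct: bool) -> float:
        if not is_correct:
            return 0.0
        lvl = self.level(pass_rate)
        value = (lvl + 1) / (1 + self.solves[lvl])  # harder pays more, repeats pay less
        self.solves[lvl] += 1
        return value


dopamine = DopamineReward()
easy_streak = [dopamine.reward(0.9, True) for _ in range(3)]  # same easy level, 3 times
harder = dopamine.reward(0.5, True)                            # first solve at a harder level
```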

Information synthesis: humans reading across different fields can quickly synthesize breakthrough insights simply by connecting ideas from those fields and getting real-world feedback. Once the model reaches a decent understanding of multiple fields, we need to train it on cross-field tasks so that it can synthesize the knowledge of the base model.

Unlocking the potential of the base model.

Current models aren't able to do this because they lack real-world feedback, and the RL tasks they are being trained on have not reached this point yet.

Adding a memory module to LLMs, using the idea of surprise, similar to what is described in the Titans paper by Google.

Dopamine works this way as well: it compares the expected situation/reward with what actually happened, and if the two differ significantly, the event is noted.
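The "expected vs. actual" idea can be sketched as a surprise-gated memory. This is a deliberately simple reward-prediction-error version, not the Titans mechanism (Titans measures surprise with the gradient of a learned memory's loss); `SurpriseMemory`, `threshold` and `lr` are hypothetical, and the outcome could be any scalar signal such as a reward.

```python
class SurpriseMemory:
    """Hypothetical surprise-gated memory based on a reward prediction error."""

    def __init__(self, threshold: float = 0.5, lr: float = 0.1):
        self.threshold = threshold  # how different an outcome must be to be noted
        self.lr = lr                # how quickly the expectation tracks outcomes
        self.expected = 0.0         # running expectation of the signal
        self.memory: list[tuple[str, float]] = []  # (event, surprise) pairs

    def observe(self, event: str, outcome: float) -> float:
        """Compare the outcome to the expectation, store it if surprising, then
        move the expectation toward the outcome. Returns the surprise."""
        surprise = outcome - self.expected
        if abs(surprise) > self.threshold:
            self.memory.append((event, surprise))
        self.expected += self.lr * surprise
        return surprise


mem = SurpriseMemory(threshold=0.5, lr=0.5)
for event, outcome in [("a", 1.0), ("b", 1.0), ("c", 1.0), ("d", -1.0)]:
    mem.observe(event, outcome)
# "a" is new, "b" and "c" are increasingly expected, "d" breaks the pattern.
```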